
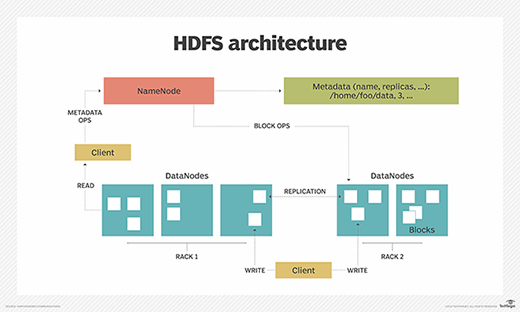

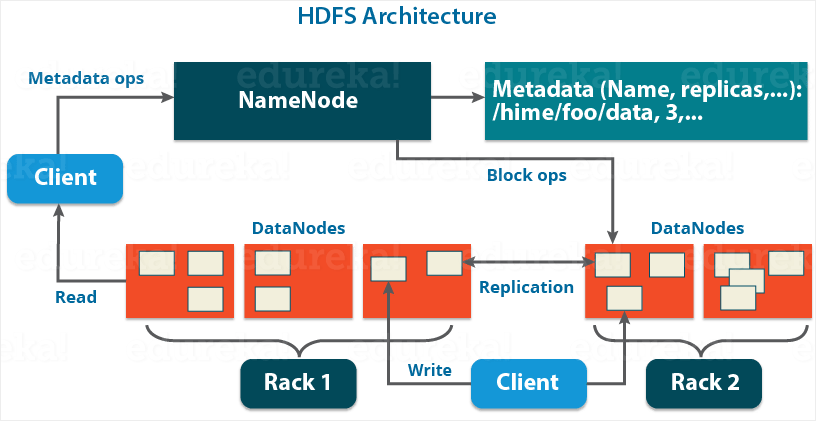

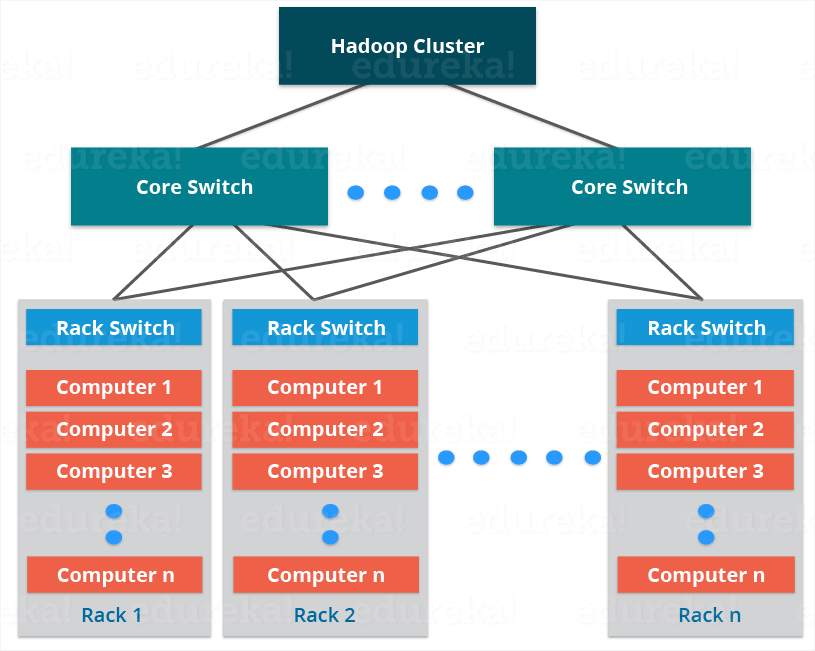

Hadoop Distributed File System Architecture Design

The Hadoop Distributed File System (HDFS) is designed to store very large data sets reliably and to stream those data sets at high bandwidth to user applications. It has many similarities with existing distributed file systems, but the differences are significant. The original HDFS design did not support user quotas, access permissions, or links. In a large cluster, thousands of servers both host directly attached storage and execute user application tasks.

Hadoop Distributed File System Ml Wiki From mlwiki.org


HDFS is a distributed file system designed to run on general-purpose (commodity) hardware. It has many similarities with existing distributed file systems, but the differences from them are significant.

HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware.

HDFS is a distributed file system that runs on commodity hardware and provides high-throughput access to application data. The original design left out user quotas, access permissions, and links, but the architecture did not rule out implementing them, and later releases added quotas and a POSIX-like permission model.

Source: pitchengine.com


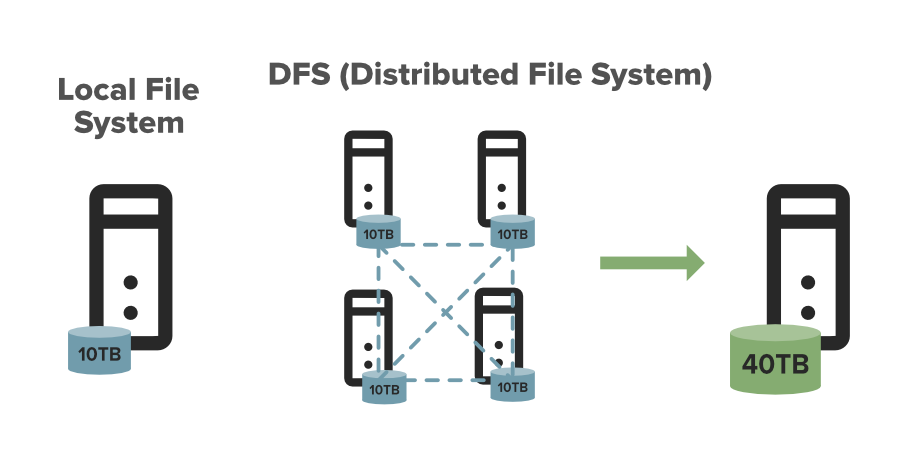

HDFS is a distributed, scalable, and portable file system written in Java for the Hadoop framework. It is highly fault-tolerant and is designed to be deployed on low-cost commodity hardware. By distributing storage and computation across many servers, the resource can grow with demand.

Source: researchgate.net


HDFS is a highly fault-tolerant system and is suitable for deployment on cheap machines. Because the cluster uses low-cost commodity hardware, the chance of a node failing is high, which is why fault tolerance is a core design goal. HDFS has a lot in common with existing distributed file systems, but at the same time it is quite different from them.
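The link between unreliable nodes and fault tolerance can be illustrated with a toy model. The sketch below is not HDFS code: the function names, node names, and the uniformly random placement policy are assumptions made for illustration (real HDFS placement is rack-aware). The replication factor of 3 mirrors the usual HDFS default.

```python
import random
from typing import Dict, Iterable, List, Set


def place_replicas(block_ids: Iterable[str], nodes: List[str],
                   replication: int = 3, seed: int = 0) -> Dict[str, Set[str]]:
    """Assign each block to `replication` distinct nodes (toy random policy)."""
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    rng = random.Random(seed)
    return {block: set(rng.sample(nodes, replication)) for block in block_ids}


def readable_blocks(placement: Dict[str, Set[str]], failed: Set[str]) -> List[str]:
    """Return the blocks that still have at least one replica on a live node."""
    return [block for block, holders in placement.items() if holders - failed]


nodes = ["dn1", "dn2", "dn3", "dn4", "dn5"]          # hypothetical node names
placement = place_replicas(["blk_1", "blk_2", "blk_3"], nodes)

# With 3 replicas per block, any 2 simultaneous node failures leave
# every block with at least one surviving copy.
survivors = readable_blocks(placement, failed={"dn1", "dn2"})
print(len(survivors) == len(placement))
```

In this model a block becomes unreadable only when every node holding one of its replicas has failed, so raising the replication factor trades storage for tolerance of more simultaneous failures.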

Source: plopdo.com


HDFS is mainly designed to work on commodity hardware (inexpensive devices) using a distributed file system design. With this design, what you can expect from HDFS is fault tolerance, reliable storage of very large data sets, and high-bandwidth streaming of those data sets to user applications.

Source: geeksforgeeks.org


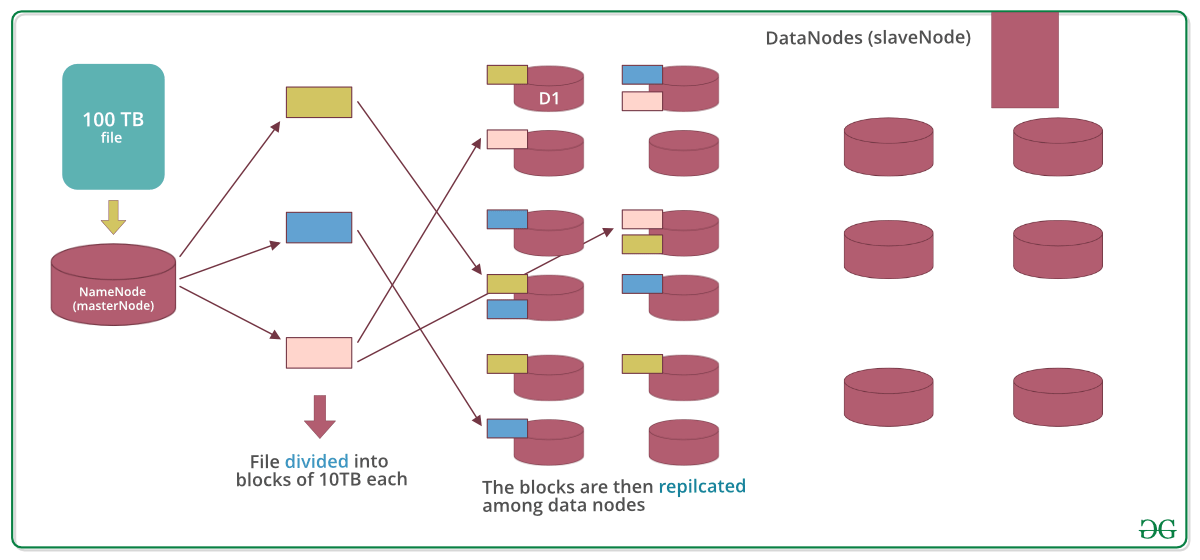

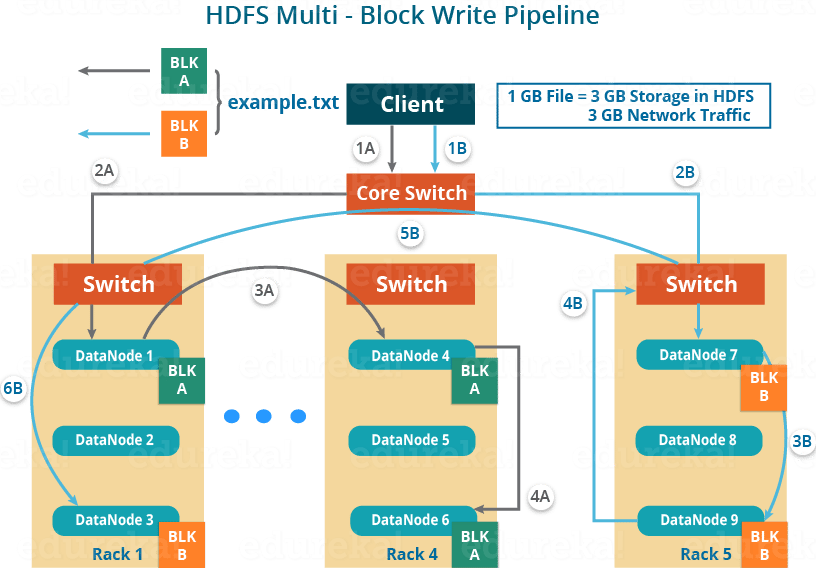

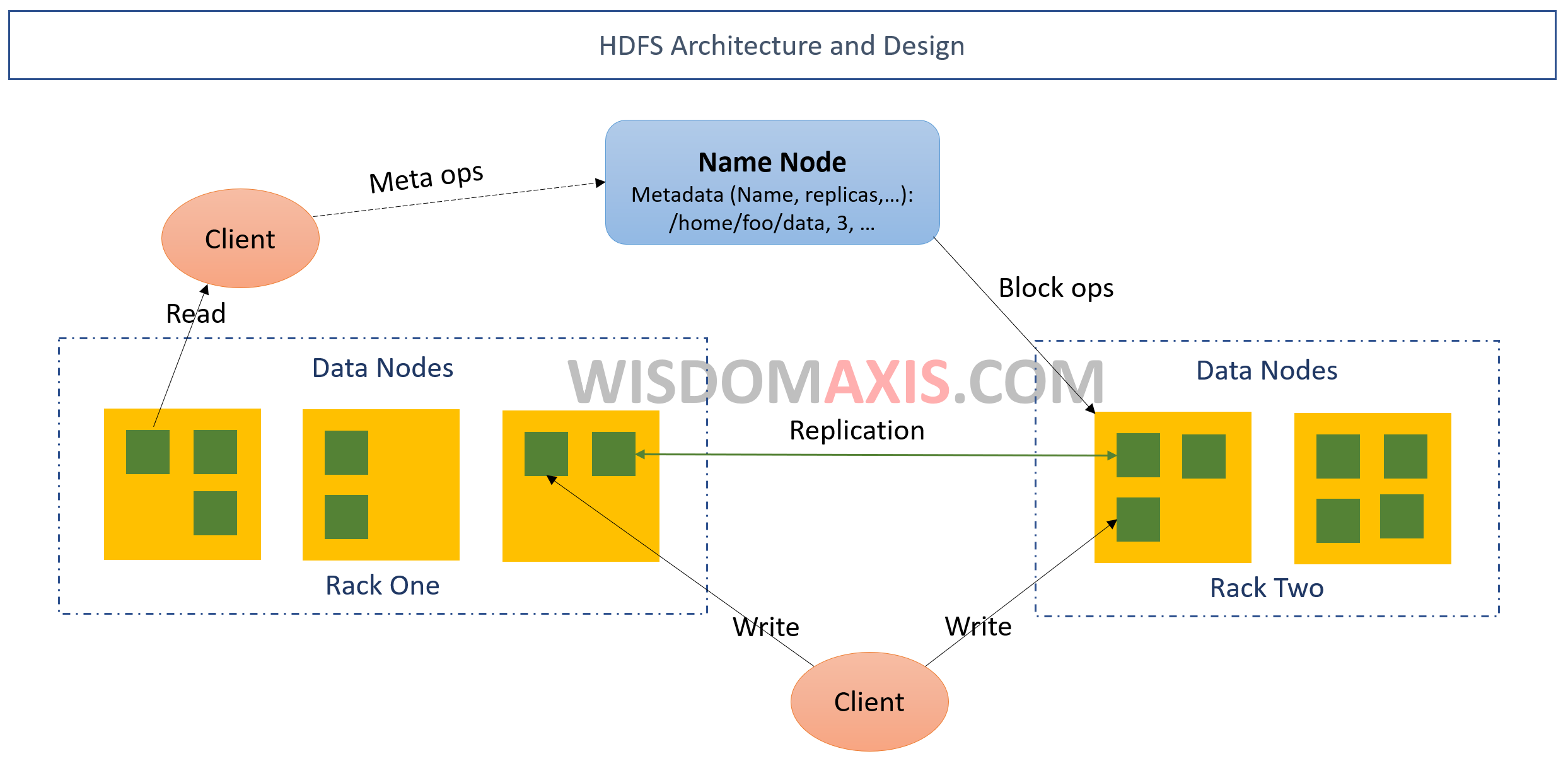

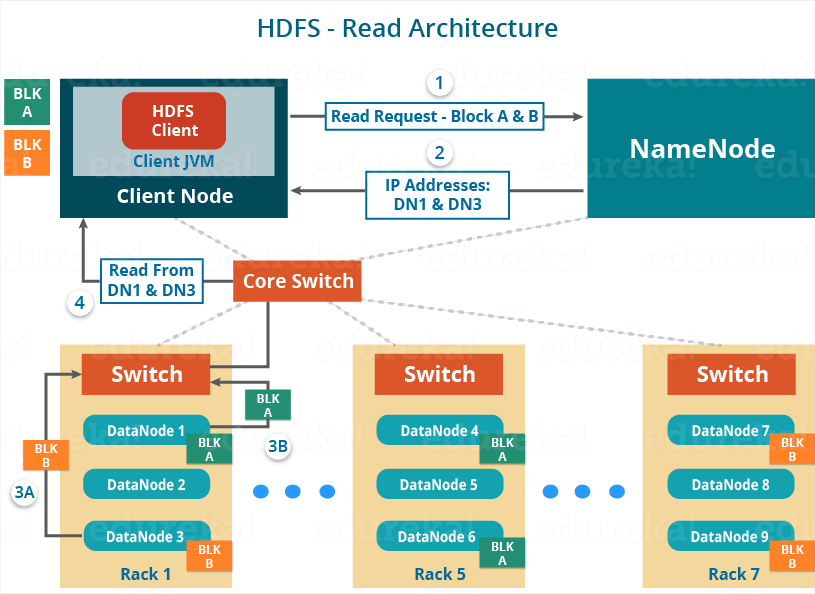

File system namespace: HDFS supports a traditional hierarchical file organization. HDFS provides high-throughput access to application data. It splits each data unit into smaller units called blocks and stores them in a distributed manner.
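As a rough illustration of block splitting, the following sketch computes the byte ranges a file would be divided into. It is a simplified model, not the HDFS implementation: the function name is hypothetical, and the 128 MiB block size is an assumption based on the common default, which is configurable in a real cluster.

```python
from typing import List, Tuple

MIB = 1024 * 1024


def split_into_blocks(file_size: int,
                      block_size: int = 128 * MIB) -> List[Tuple[int, int]]:
    """Return (offset, length) pairs that cover a file of `file_size` bytes."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        # The final block only holds the bytes that remain.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


blocks = split_into_blocks(300 * MIB)
print(len(blocks))            # 3
print(blocks[-1][1] // MIB)   # 44
```

A 300 MiB file therefore maps to two full 128 MiB blocks plus one 44 MiB block, and each block can be stored on a different machine.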

Source: mlwiki.org


HDFS (Hadoop Distributed File System) provides the storage layer of a Hadoop cluster. It is designed to store very large data sets reliably and to stream those data sets at high bandwidth to user applications. In a large cluster, thousands of servers both host directly attached storage and execute user application tasks.

Source: researchgate.net


HDFS (Hadoop Distributed File System) is designed to store extremely large files with a streaming data access pattern, and it runs on commodity hardware. It is highly fault-tolerant and can be deployed on low-cost hardware.

Source: geeksforgeeks.org


HDFS has a lot in common with existing distributed file systems. It is a highly fault-tolerant system and is suitable for deployment on cheap machines.

Source: edureka.co


HDFS is a distributed, scalable, and portable file system written in Java for the Hadoop framework. It is designed to run on commodity hardware and has many similarities with existing distributed file systems.

Source: edureka.co


HDFS is a highly fault-tolerant system designed to be deployed on low-cost hardware. It has many similarities with existing distributed file systems, but at the same time it is quite different from them.

Source: yoyoclouds.wordpress.com


In this post you will learn about the Hadoop HDFS architecture and its design. HDFS differs significantly from other distributed file systems. By distributing storage and computation across many servers, the resource can grow with demand. HDFS provides high-throughput access to application data.

Source: ques10.com


HDFS has a lot in common with existing distributed file systems. It splits each data unit into smaller units called blocks and stores them in a distributed manner. HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware.

Source: tyazhin.info


HDFS (Hadoop Distributed File System) provides the storage layer of a Hadoop cluster. It is a distributed, scalable, and portable file system written in Java for the Hadoop framework. It has many similarities with existing distributed file systems, but the differences are significant.

Source: edureka.co


The Hadoop Distributed File System (HDFS) is a Java-based distributed file system designed to run on commodity hardware. It has a lot in common with existing distributed file systems. In a large cluster, thousands of servers both host directly attached storage and execute user application tasks. HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware.

Source: wisdomaxis.com


HDFS has many similarities with existing distributed file systems, but the differences are significant. Some consider it a data store rather than a file system because of its lack of POSIX compliance, but it does provide shell commands and Java application programming interface (API) methods that are similar to those of other file systems.

Source: developpaper.com


HDFS is a distributed file system designed to run on commodity hardware. It is highly fault-tolerant and can be deployed on low-cost hardware. It has a lot in common with existing distributed file systems.

Source: edureka.co


The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on general-purpose (commodity) hardware. It is highly fault-tolerant and is designed to be deployed on low-cost hardware.

Source: sciencedirect.com


File system namespace: HDFS supports a traditional hierarchical file organization. Since data is stored across a network, all the complications of a network come into play. HDFS has many similarities with existing distributed file systems. It is fault-tolerant and is designed to be deployed on low-cost hardware.
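A hierarchical namespace of this kind can be pictured as a tree of directories and files. The sketch below is a minimal in-memory model only; the `Namespace` class and its method names are hypothetical and do not correspond to the HDFS Java API or to how HDFS stores its metadata.

```python
from typing import Dict, List, Optional


class Namespace:
    """Toy directory tree: a directory is a dict, a file is None."""

    def __init__(self) -> None:
        self._root: Dict[str, Optional[dict]] = {}

    @staticmethod
    def _parts(path: str) -> List[str]:
        return [p for p in path.split("/") if p]

    def _walk(self, parts: List[str]) -> dict:
        node = self._root
        for part in parts:
            if part not in node:
                raise FileNotFoundError(part)
            child = node[part]
            if child is None:
                raise NotADirectoryError(part)
            node = child
        return node

    def mkdirs(self, path: str) -> None:
        """Create a directory and any missing parents."""
        node = self._root
        for part in self._parts(path):
            child = node.setdefault(part, {})
            if child is None:
                raise NotADirectoryError(part)
            node = child

    def create(self, path: str) -> None:
        """Create an empty file inside an existing directory."""
        parts = self._parts(path)
        if not parts:
            raise ValueError("path must name a file")
        parent = self._walk(parts[:-1])
        if parts[-1] in parent:
            raise FileExistsError(path)
        parent[parts[-1]] = None

    def listdir(self, path: str) -> List[str]:
        return sorted(self._walk(self._parts(path)))


ns = Namespace()
ns.mkdirs("/user/alice/logs")
ns.create("/user/alice/logs/part-0000")
ns.create("/user/alice/notes.txt")
print(ns.listdir("/user/alice"))   # ['logs', 'notes.txt']
```

The example paths are made up; the point is only that files live inside nested directories and are addressed by slash-separated paths, as in a conventional file system.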

Source: trinhdinhphuong.com


HDFS (Hadoop Distributed File System) provides the storage layer of a Hadoop cluster and is designed to run on general-purpose (commodity) hardware. With this design, what you can expect from HDFS is fault tolerance. HDFS is designed to support large files; by large, we mean file sizes from gigabytes to terabytes.
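To give a sense of scale for such files, the sketch below estimates how many blocks and how much raw disk space a single large file would take. The function name and the default values (128 MiB blocks, 3 replicas) are assumptions chosen to match common HDFS defaults; actual clusters may be configured differently.

```python
from typing import Dict

MIB = 1024 * 1024
TIB = 1024 * 1024 * MIB


def storage_footprint(file_size: int, block_size: int = 128 * MIB,
                      replication: int = 3) -> Dict[str, int]:
    """Estimate block count, replica count, and raw bytes for one file."""
    if file_size < 0 or block_size <= 0 or replication <= 0:
        raise ValueError("sizes and replication must be positive")
    blocks = -(-file_size // block_size)        # integer ceiling division
    return {
        "blocks": blocks,
        "block_replicas": blocks * replication,
        # A partial last block only occupies its actual length on disk.
        "raw_bytes": file_size * replication,
    }


footprint = storage_footprint(1 * TIB)
print(footprint["blocks"])              # 8192
print(footprint["block_replicas"])      # 24576
print(footprint["raw_bytes"] // TIB)    # 3
```

Under these assumptions a 1 TiB file is tracked as 8192 blocks and consumes 3 TiB of raw disk across the cluster.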
